In applications that demand extreme performance, such as autonomous driving, tactical robotics, and aerial drones, servo response speed and control latency are mission-critical. Cloud computing offers ample processing power, but the round trip needed to send sensor data to a remote server and wait for the result makes latency a bottleneck. GXServo addresses this by embedding AI edge chips that run model inference and decision-making on the device itself, achieving millisecond-level responsiveness.

I. Architecture of Edge AI Technology

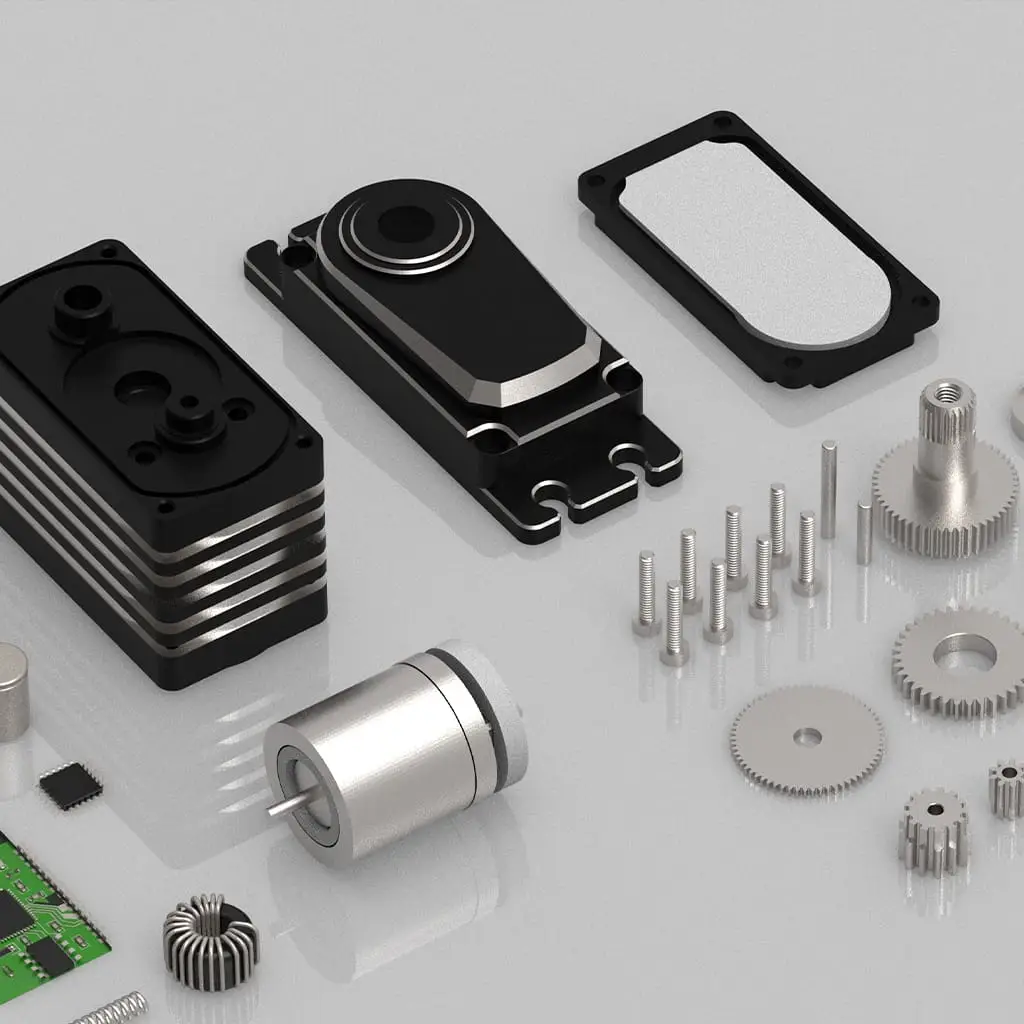

GXServo builds compact AI hardware, such as an NVIDIA Jetson Nano module or a Google Edge TPU accelerator, into its control board. Lightweight neural networks such as MobileNetV3 or Tiny-YOLO run locally and handle tasks like object detection and path recognition without sending data to the cloud.
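The on-device flow described above (capture a frame, run a local model, turn the result into a servo command) can be sketched roughly as follows. This is only an illustration: `placeholder_detector` stands in for a real lightweight network, and `pan_angle_for`, `process_frame`, and the 60-degree field of view are hypothetical names and values, not part of GXServo.

```python
import time
from typing import Callable, List, Tuple

Frame = List[List[float]]  # tiny grayscale image as nested lists (placeholder format)


def placeholder_detector(frame: Frame) -> Tuple[int, int]:
    """Stand-in for an on-device model: returns (row, col) of the brightest pixel."""
    best = (0, 0)
    for r, row in enumerate(frame):
        for c, value in enumerate(row):
            if value > frame[best[0]][best[1]]:
                best = (r, c)
    return best


def pan_angle_for(col: int, width: int, fov_deg: float = 60.0) -> float:
    """Map a pixel column to a pan angle, with 0 degrees at the image centre."""
    return (col + 0.5) / width * fov_deg - fov_deg / 2.0


def process_frame(
    frame: Frame,
    detector: Callable[[Frame], Tuple[int, int]] = placeholder_detector,
) -> Tuple[float, float]:
    """Run detection locally and return (pan angle in degrees, latency in ms)."""
    start = time.perf_counter()
    _, col = detector(frame)
    angle = pan_angle_for(col, width=len(frame[0]))
    latency_ms = (time.perf_counter() - start) * 1000.0
    return angle, latency_ms


if __name__ == "__main__":
    frame = [
        [0.1, 0.1, 0.1, 0.1],
        [0.1, 0.1, 0.1, 0.9],  # bright target in the right-most column
        [0.1, 0.1, 0.1, 0.1],
    ]
    angle, latency_ms = process_frame(frame)
    print(f"pan to {angle:.1f} deg, computed in {latency_ms:.3f} ms")
```

Because no network call sits between detection and the servo command, the measured latency covers only local computation.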

II. Case Study: GXServo in Autonomous Vehicle Camera Adjustment

In a Level 4 (L4) autonomous driving project, GXServo adjusts camera gimbals and side mirrors based on real-time visual input. Previously, cloud-based processing introduced delays of over 300 ms. With on-device edge AI, the delay fell to 42 ms, a reduction of more than sevenfold, which improves safety and accuracy at high speeds.
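To put the two latency figures in perspective, the short calculation below converts each delay into the distance a vehicle covers before the adjustment takes effect. The 120 km/h speed is an illustrative assumption, not a figure from the project, and `distance_during_latency` is a hypothetical helper.

```python
def distance_during_latency(speed_kmh: float, latency_ms: float) -> float:
    """Metres travelled while a command is still in flight."""
    speed_m_per_s = speed_kmh * 1000.0 / 3600.0
    return speed_m_per_s * latency_ms / 1000.0


ASSUMED_SPEED_KMH = 120.0  # illustrative assumption, not from the case study

for label, latency_ms in (("cloud", 300.0), ("edge", 42.0)):
    metres = distance_during_latency(ASSUMED_SPEED_KMH, latency_ms)
    print(f"{label}: {latency_ms:.0f} ms -> {metres:.2f} m travelled")
```

At the assumed speed, 300 ms corresponds to about 10 m of travel and 42 ms to about 1.4 m.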

III. Real-Time Control Scheduling

GXServo uses an asynchronous inference scheduling framework to allocate computing resources dynamically between AI tasks and traditional control logic. For example, visual recognition is prioritized over path correction. Because inference is scheduled asynchronously, the control loop does not block on model execution, which helps sustain throughput while keeping latency low.
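The framework's internals are not described here, so the following is only a minimal sketch of priority-based asynchronous scheduling built on Python's standard `asyncio.PriorityQueue`. The task names, the priority values, and the `run_demo` helper are illustrative assumptions rather than GXServo's actual design.

```python
import asyncio
from typing import List

# Lower number = higher priority. The ordering mirrors the text:
# visual recognition is served before path correction.
PRIORITY = {"visual_recognition": 0, "path_correction": 1}


async def worker(queue: asyncio.PriorityQueue, log: List[str]) -> None:
    """Pull the highest-priority task and 'run' it (simulated here)."""
    while True:
        _priority, _seq, name = await queue.get()
        await asyncio.sleep(0)  # stand-in for the real inference or control step
        log.append(name)
        queue.task_done()


async def run_demo() -> List[str]:
    queue: asyncio.PriorityQueue = asyncio.PriorityQueue()
    log: List[str] = []
    submitted = ["path_correction", "visual_recognition",
                 "path_correction", "visual_recognition"]
    # The sequence number breaks ties so equal-priority tasks stay in FIFO order.
    for seq, name in enumerate(submitted):
        queue.put_nowait((PRIORITY[name], seq, name))
    task = asyncio.create_task(worker(queue, log))
    await queue.join()  # wait until every queued task has been processed
    task.cancel()
    try:
        await task
    except asyncio.CancelledError:
        pass
    return log


if __name__ == "__main__":
    print(asyncio.run(run_demo()))
```

Although the path-correction tasks are submitted first, the worker drains both visual-recognition tasks before them. A production scheduler would also need preemption and deadline handling, which this sketch leaves out.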

IV. Next-Level Goal: Autonomous Servo Systems with Edge Collaborative Networks

The next goal is autonomous servo systems that can form edge collaborative networks. Each GXServo unit would not only control itself but also share predictive data and real-time decisions with other units. The result is a decentralized, intelligent network with no central coordinator, enabling advanced behaviors such as swarm robotics and real-time global optimization.
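One simple way to picture units agreeing on a shared value without a central coordinator is gossip averaging, sketched below. This is a generic textbook technique used purely for illustration, not a description of GXServo's protocol. The four-unit ring topology, the starting values, and the function names are all assumptions.

```python
from typing import Dict, List


def gossip_round(values: List[float], neighbors: Dict[int, List[int]]) -> List[float]:
    """One synchronous round: each unit averages its value with its neighbors' values."""
    updated = []
    for unit, own in enumerate(values):
        group = [own] + [values[n] for n in neighbors[unit]]
        updated.append(sum(group) / len(group))
    return updated


def run_gossip(values: List[float], neighbors: Dict[int, List[int]], rounds: int) -> List[float]:
    """Repeat gossip rounds; no unit ever sees more than its direct neighbors."""
    for _ in range(rounds):
        values = gossip_round(values, neighbors)
    return values


# Hypothetical ring of four units, each linked to the unit on either side.
RING = {0: [3, 1], 1: [0, 2], 2: [1, 3], 3: [2, 0]}
local_estimates = [10.0, 20.0, 30.0, 40.0]  # e.g. each unit's own prediction

shared = run_gossip(local_estimates, RING, rounds=30)
print([round(v, 3) for v in shared])
```

On this ring every unit has the same number of neighbors, so the averaging preserves the network-wide mean and all four values settle near 25. On irregular topologies the same update still reaches agreement, but not necessarily on the exact mean.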